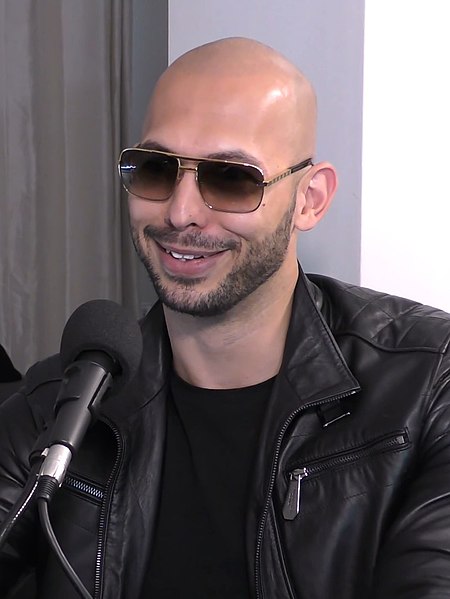

Andrew Tate: The Sweet Spot

September 23, 2022

Social media has become a staple of society as a source of news, information, and entertainment. It has also become a vicious battleground of ideologies, personal opinions, and more. Social media has made it crystal clear that what one individual holds as true and good might be found offensive by others. Social media companies themselves play a role in this ideological struggle by suppressing many ideas deemed “harmful” or “offensive.” In a country where freedom of speech is highly valued, many believe that attempts by social media companies to suppress certain opinions are an attack on free speech. Consequently, prominent social media platforms find themselves caught in the middle of the struggle. Should they dictate what can and cannot be said on their platforms, or should they give every user free rein to speak their mind, regardless of factual basis or harmful rhetoric?

The answer is not a simple “yes” or “no.” There is a middle ground, somewhere between total freedom and total control, where the answer lies. Should companies exert some control over the content their users share? The answer is simple: yes. Users spreading information or theories that lack any factual basis should not be allowed to do so, especially if those ideas prove harmful to the health and safety of other users who take the information as fact. When such information is posted, many companies flag the post as misleading or factually incorrect. They do not remove it; they simply qualify it if the user has not already done so. In such situations, users twist an opinion into a fact, though it has no foundation other than their personal belief. One is entitled to one’s opinion, yes, but one is not entitled to advertise that opinion as fact. On the Internet, misinformation can travel at high speed, potentially endangering uninformed readers. Such posts are hard to control, as users should be allowed to speak their minds. Flagging posts that lack a factual basis is a good solution: users can still share an opinion, but others are not misled by it. The practice is still controversial, but it is well within the companies’ rights and is more a move toward public safety than some private agenda. Those whose posts are flagged claim the act infringes on their free speech, but even if this were true, it would be irrelevant. Users agree to the terms and conditions set by the platform, which allow the company to act as it sees fit. Hate speech, slander, misinformation, and more are actionable offenses. So, while users may FEEL their rights are violated, by using a platform they agree to the company’s terms and conditions, opening themselves up to action by the company should they be found breaking the rules.

However, it can be hard to define what constitutes “hate speech” or “misinformation.” Certainly, there are many obvious examples: racist attacks, harmful prejudices, and factually disproven theories, to name a few. Yet there is a grey area, and social media companies trying to navigate it face backlash no matter what they decide. Do they ban someone they feel is perpetuating dangerous information or harmful attacks and face backlash from that person’s supporters? Or do they allow the user to stay and face backlash from those who feel offended? It is a tricky position, and companies are searching for a middle ground that some believe does not exist. Right now, companies are trying to please as many users as possible. While that is a good idea in principle, it is simply impossible to please everyone, and catering to a large crowd can end up making things worse. Many feel that companies are “bullied” by the majority into taking action against undeserving users. The bans of Andrew Tate, Donald Trump, and others are examples of decisions that divided the Internet. These instances make the public question the extent of companies’ policies regarding free speech. Many believe that companies are tightening their grip too much, while others feel they have not gone far enough.

Companies need to find a middle ground where people still feel free to speak their minds without overstepping or misusing that freedom. Users, too, need to learn how to coexist with those who may not share their views. The problem cannot be left to the companies alone, or nothing will ever change. When users refuse to cooperate with one another, they force companies into making decisions that are guaranteed to anger large numbers of people. While companies must struggle to find a middle ground between lax rules and heavy-handed decisions, users must also find a way to work together to promote a better, healthier online environment.